graph neural networks (GNNs)

What are graph neural networks (GNNs)?

Graph neural networks (GNNs) are a type of neural network architecture and deep learning method that can help users analyze graphs, enabling them to make predictions based on the data described by a graph's nodes and edges.

Graphs represent relationships between data points, known as nodes. Each node represents a subject -- such as a person, object or place -- and the edges represent the relationships between the nodes. Unlike a chart plotted on an x-axis and a y-axis, a graph in this sense is defined only by its nodes and edges. Edges can be directed or undirected, and both nodes and edges can carry attributes.
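
As a rough illustration of the node-and-edge structure described above, the following sketch stores a small undirected graph as an adjacency list. The names and friendships are hypothetical toy data, and `build_adjacency` is an illustrative helper, not part of any library.

```python
from collections import defaultdict

# Hypothetical toy data: people are nodes, friendships are undirected edges.
edges = [("alice", "bob"), ("bob", "carol"), ("carol", "dave"), ("alice", "carol")]


def build_adjacency(edge_list):
    """Return a dict mapping each node to the sorted list of its neighbors."""
    adjacency = defaultdict(set)
    for u, v in edge_list:
        adjacency[u].add(v)
        adjacency[v].add(u)  # undirected: record the edge in both directions
    return {node: sorted(neighbors) for node, neighbors in adjacency.items()}


adjacency = build_adjacency(edges)
print(adjacency["carol"])
```

An adjacency list is only one possible representation; an adjacency matrix or a plain edge list would carry the same information.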

Typically, machine learning (ML) and deep learning algorithms are trained on simple, regularly structured data types, such as vectors, sequences and grids, which makes graph data difficult for them to handle. In addition, the nodes of a graph have no inherent order, and graphs don't have a fixed size or form.

GNNs are designed to process graph data -- specifically, structural and relational data. They are flexible and can model complex data relationships, which is something that traditional ML models and standard neural network architectures handle poorly.

Different branches of science, industry and research that store data in graph databases can use GNNs. Organizations might use GNNs for graph and node classification, as well as node, edge and graph prediction tasks. GNNs excel at finding patterns and relationships between data points.

How do GNNs work?

Graphs are unstructured, meaning that they can be any size or contain any kind of data, such as images or text.

GNNs use a process called message passing to put graphs in a form that ML algorithms can understand. In this process, each node's embedding is repeatedly updated with information gathered from its neighboring nodes, so the embedding comes to describe both the node itself and its position in the graph. An artificial intelligence (AI) model can then find patterns and make predictions based on the embedded data.
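
The message passing idea can be sketched in a few lines. This is a minimal illustration that assumes mean aggregation with no learned weights; real GNN layers typically add trainable parameters. The function name `message_passing_round` and the three-node path graph are hypothetical.

```python
def message_passing_round(adjacency, features):
    """One round: each node averages its own vector with its neighbors' vectors."""
    updated = {}
    for node, neighbors in adjacency.items():
        group = [features[node]] + [features[n] for n in neighbors]
        dim = len(features[node])
        updated[node] = [sum(vec[i] for vec in group) / len(group) for i in range(dim)]
    return updated


# Hypothetical path graph a - b - c with one-dimensional node features.
adjacency = {"a": ["b"], "b": ["a", "c"], "c": ["b"]}
features = {"a": [1.0], "b": [0.0], "c": [0.0]}

after_one = message_passing_round(adjacency, features)
after_two = message_passing_round(adjacency, after_one)
print(after_one, after_two)
```

After one round, node `c` has only heard from its direct neighbor `b`. After two rounds, information that started at `a` has reached `c`, which is how stacking rounds lets each embedding reflect a wider neighborhood.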

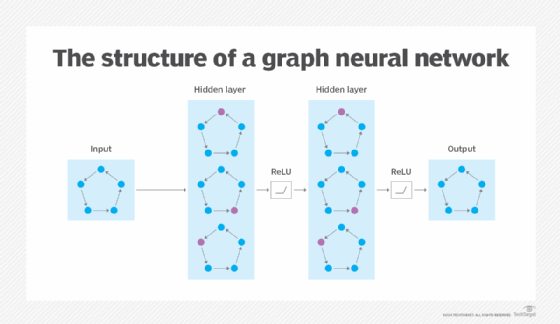

GNNs are constructed using three main kinds of layers: an input layer, one or more hidden layers and an output layer. The input layer takes in the graph data, which is typically a matrix or a list of matrices, such as a matrix describing which nodes are connected and a matrix of node features. The hidden layers process the data, and the output layer creates the GNN's output response.

The process also uses a rectified linear unit (ReLU), which is an activation function commonly used in deep learning models and convolutional neural networks (CNNs). The ReLU function introduces nonlinearity to the model: it returns the input value if that value is positive and returns zero otherwise.
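
As a small sketch of how ReLU fits into a hidden layer, the following applies a linear map and then the activation. The helper `dense_relu` and the weight values are hypothetical and chosen only so the arithmetic is easy to follow.

```python
def relu(x):
    """Rectified linear unit: keep positive values, clamp negatives to zero."""
    return max(0.0, x)


def dense_relu(vector, weights, bias):
    """Linear map followed by ReLU. `weights` holds one row per output unit."""
    return [
        relu(sum(w * x for w, x in zip(row, vector)) + b)
        for row, b in zip(weights, bias)
    ]


# Hypothetical input and weights.
hidden = dense_relu([1.0, -2.0], weights=[[1.0, 1.0], [0.5, -0.5]], bias=[0.0, 0.0])
print(hidden)  # [0.0, 1.5]
```

The first unit computes 1.0 - 2.0 = -1.0, which ReLU clamps to zero; the second computes 0.5 + 1.0 = 1.5, which passes through unchanged.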

GNN models are typically trained using standard neural network training methods, such as backpropagation, and can also take advantage of transfer learning, but they are structured specifically for training with graph data.

Types of graph neural networks

GNNs are typically classified as the following types:

- Graph convolutional networks (GCNs). GCNs learn features by inspecting nearby nodes. They consist of graph convolutions and a linear layer, and use a nonlinear activation function.

- Recurrent graph neural networks (RGNNs). RGNNs learn diffusion patterns in multirelational graphs, in which nodes are connected by more than one type of relation.

- Spatial graph convolutional networks. Spatial GCNs define convolutional layers that are meant for information passing and grouping operations. They aggregate data of nearby nodes and edges to a specific node to update its hidden embedding.

- Spectral graph convolutional networks. Spectral GCNs are based on graph signal filters. They define the spectral domain of data based on a mathematical transformation called the graph Fourier transform.

- Recurrent neural networks (RNNs). RNNs are a type of artificial neural network that processes sequential or time series data. The output of an RNN depends on prior sequence elements. RNNs are not graph-specific on their own, but the same recurrent idea underlies RGNNs.

- Graph autoencoder networks. These learn graph representations that reconstruct input graphs by using an encoder and decoder.
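
To make the GCN description above more concrete, here is a minimal sketch of a single graph convolution in plain Python. It assumes one widely used formulation, relu(D^-1/2 (A + I) D^-1/2 H W), where A is the adjacency matrix, D the degree matrix after adding self-loops, H the node features and W a weight matrix. The function name `gcn_layer` and the example values are hypothetical, and other GCN variants normalize differently.

```python
import math


def gcn_layer(adj, feats, weight):
    """One graph convolution: relu(D^-1/2 (A + I) D^-1/2 @ feats @ weight)."""
    n = len(adj)
    # Add self-loops so each node keeps its own features.
    a_hat = [[adj[i][j] + (1.0 if i == j else 0.0) for j in range(n)] for i in range(n)]
    deg = [sum(row) for row in a_hat]
    # Symmetric normalization by node degree.
    norm = [[a_hat[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)] for i in range(n)]
    # Aggregate neighbor features: norm @ feats.
    in_dim = len(feats[0])
    agg = [[sum(norm[i][k] * feats[k][f] for k in range(n)) for f in range(in_dim)] for i in range(n)]
    # Linear layer followed by ReLU: relu(agg @ weight).
    out_dim = len(weight[0])
    return [
        [max(0.0, sum(agg[i][f] * weight[f][o] for f in range(in_dim))) for o in range(out_dim)]
        for i in range(n)
    ]


# Hypothetical two-node graph with a single edge and one feature per node.
adj = [[0.0, 1.0], [1.0, 0.0]]
feats = [[1.0], [3.0]]
out = gcn_layer(adj, feats, weight=[[1.0]])
print(out)  # [[2.0], [2.0]]
```

With self-loops, both nodes have degree 2, so each output is the average of the two input features. In practice, libraries implement this with sparse matrix operations and learn `weight` during training.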

Applications of graph neural networks

GNNs can be used in a variety of tasks, including the following:

- Natural language processing (NLP). GNNs can be used in NLP tasks that require reading graphs. This includes tasks such as text classification, semantics, relation extraction and question answering.

- Computer vision. GNNs are applicable in tasks such as image classification.

- Node classification. Node classification predicts a label for each node, based on the node's embedding.

- Link prediction. This examines the relationship between two data points in a graph to determine if the two points are connected.

- Graph classification. Graph classification categorizes graphs into groups to identify them.

- Graph visualization. This process finds the structures and anomalies present in graph data to help users understand a graph.
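
As an illustration of the link prediction task listed above, one simple approach scores a pair of nodes by the dot product of their embeddings and squashes the result into the range 0 to 1. The embeddings below are hypothetical stand-ins for what a trained GNN might produce, and `link_score` is an illustrative helper rather than a library function.

```python
import math


def link_score(emb_u, emb_v):
    """Sigmoid of the dot product: a score in (0, 1) for whether an edge exists."""
    dot = sum(a * b for a, b in zip(emb_u, emb_v))
    return 1.0 / (1.0 + math.exp(-dot))


# Hypothetical node embeddings (assumed to come from a trained GNN).
embeddings = {"u": [1.0, 2.0], "v": [1.5, 1.0], "w": [-1.0, -2.0]}

score_uv = link_score(embeddings["u"], embeddings["v"])
score_uw = link_score(embeddings["u"], embeddings["w"])
print(score_uv, score_uw)
```

Nodes whose embeddings point in similar directions receive a score above 0.5, while nodes with opposing embeddings score below it. Other scoring functions, such as a small neural network over the concatenated embeddings, are also possible.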

How do GNNs differ from traditional neural networks?

Graph neural networks are comparable to other types of neural networks, but are more specialized to handle data in the form of graphs. This is because graph data -- which often consists of unstructured data and unordered nodes, and might even lack a fixed form -- can be more difficult to process with other types of neural networks.

While a traditional neural network is designed to process data as vectors and sequences, graph neural networks can process global and local data in the form of graphs, letting GNNs handle tasks and queries in graph databases.

CNNs vs. GNNs and why CNNs don't work on graphs

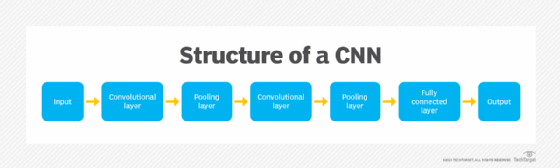

A CNN is a category of ML model and deep learning algorithm that's well suited to analyzing visual data sets. CNNs use principles from linear algebra, particularly convolution operations, to extract features and identify patterns within images. CNNs are predominantly used to process images, but can also work with audio and other signal data. They're used in fields including healthcare, automotive, retail and social media, and in virtual assistants.

Although CNNs and GNNs are both types of neural networks, it's computationally challenging for CNNs to process graph data. CNNs expect inputs arranged on a regular grid, such as the pixels of an image, whereas graph topology is generally too arbitrary and complicated for CNNs to handle.

CNNs are designed to operate specifically with structured data, while GNNs can operate using structured and unstructured data. GNNs can produce the same result on isomorphic graphs, which are graphs that are structurally identical even though their nodes and edges are labeled or ordered differently. CNNs, by contrast, don't act identically on flipped or rotated images, which makes CNNs less consistent.
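
The behavior on isomorphic graphs can be demonstrated with a small sketch. It assumes sum aggregation over neighbors followed by a sum over all nodes, which does not depend on how nodes are numbered. The graph, the feature values and the `graph_readout` helper are hypothetical.

```python
def graph_readout(edges, features):
    """Sum-aggregate neighbor features once, then sum over all nodes."""
    updated = dict(features)  # start from each node's own feature
    for u, v in edges:
        updated[u] += features[v]
        updated[v] += features[u]
    return sum(updated.values())


# Hypothetical graph and an isomorphic copy with relabeled nodes.
edges_a = [(0, 1), (1, 2)]
feats_a = {0: 1.0, 1: 2.0, 2: 4.0}

relabel = {0: 2, 1: 0, 2: 1}
edges_b = [(relabel[u], relabel[v]) for u, v in edges_a]
feats_b = {relabel[n]: x for n, x in feats_a.items()}

print(graph_readout(edges_a, feats_a), graph_readout(edges_b, feats_b))  # 16.0 16.0
```

Both calls return the same value because the computation only looks at which nodes are connected, not at the labels attached to them.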

Example uses of graph neural networks

Graph neural networks are used in the following fields:

- Chemistry and protein folding. Chemists can use GNNs to research the graph structure of molecules and chemical compounds. For example, AlphaFold, developed by DeepMind, a subsidiary of Alphabet Inc., is an AI program that uses GNNs to make accurate predictions about a protein's structure.

- Social networks. GNNs are used in social media to develop recommendation systems based on both social and other item relations.

- Cybersecurity. A network of computers can be viewed as a graph, making GNNs well suited to detecting anomalies on individual nodes.

GNNs are a useful neural network architecture for data that is naturally expressed as a graph.